In this part I will continue to suggest promising ideas to Dr. Levin, who proposes radical approaches to push forward research in frontier areas of biology and medicine. The first part of this series is here: Fly Hitting a Window.

Modularity vs Micromanagement

"Agents at higher levels (e.g., organs) deform the energy landscape of actions for lower levels’ (e.g., cells or subcellular machinery). This enables the lower systems to “merely go down energy gradients” – to perform their tasks with minimal cognitive capacity, while at the same time serving the needs of the higher-level system which has exerted energy (via rewards and other actions) to shape its parts’ geodesics to be compatible with its own goals. This is a very powerful aspect of multi-scale competency because the larger system doesn’t need to micromanage the actions of the lower levels – once the geodesics are set, the system can depend on the lower levels to do what they do best"

Competency in Navigating Arbitrary Spaces: Intelligence as an Invariant for Analyzing Cognition in Diverse Embodiments

by Chris Fields and Michael Levin

First, gradients. I want to reiterate the importance of ranges over point-accurate measurements, as mentioned in the first part of this series. This relates directly to a well-known definition of insanity. According to a saying commonly attributed to Einstein, "Insanity is doing the same thing repeatedly and expecting different results." Besides using the words "same" and "different" (confirming that comparison is important for cognition, the opposite of insanity), it raises an important issue: how inputs map to outputs with respect to actions. You will probably agree that it is not the whole object that affects the result of an action. For example, many properties of tea leaves are irrelevant to the taste of tea (my post Cup of Tea is dedicated to how input parameters affect an action's results).

With respect to relevant properties, we are interested in their ranges. How do we determine the boundaries of those ranges? Dr. Levin's works often raise this question, perhaps in a different form. The boundaries are neither random nor dictated by a researcher. They are determined by the classical scientific method: change one parameter, keep the others fixed, and observe the effect. Note the dimensionality reduction. Differences in results determine which differences in input parameters matter. Again, not point-accurate measurements, but ranges.
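The procedure can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions: `brew` is a hypothetical action whose result is a discrete label, and the parameter is swept over a fixed grid, so range boundaries are only located to the grid's resolution. Sweeping an irrelevant parameter (here, the hypothetical `cup_color`) collapses into a single range, which is the dimensionality reduction at work.

```python
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]

def find_ranges(action: Callable[[Params], str], fixed: Params,
                param: str, values: List[float]) -> List[Tuple[float, float, str]]:
    """One-factor-at-a-time sweep: vary `param` over `values`, keep `fixed` as is.

    Consecutive values that give the same result are merged into one range, so
    range boundaries fall where the result changes (to the grid's resolution).
    """
    ranges: List[Tuple[float, float, str]] = []
    for v in values:
        result = action({**fixed, param: v})
        if ranges and ranges[-1][2] == result:
            ranges[-1] = (ranges[-1][0], v, result)  # same result: extend the range
        else:
            ranges.append((v, v, result))            # new result: open a new range
    return ranges

def brew(p: Params) -> str:
    """Hypothetical tea model: temperature and time matter, cup color does not."""
    if p["temp_c"] < 70:
        return "weak"
    if p["temp_c"] * p["minutes"] > 400:
        return "bitter"
    return "good"

print(find_ranges(brew, {"minutes": 5, "cup_color": 0}, "temp_c",
                  [50, 60, 70, 80, 90, 100]))
# [(50, 60, 'weak'), (70, 80, 'good'), (90, 100, 'bitter')]
print(find_ranges(brew, {"minutes": 5, "temp_c": 75}, "cup_color", [0, 1, 2]))
# [(0, 2, 'good')]
```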

Second, rewards. In my opinion, Reinforcement Learning (RL) is a flawed approach. In real time, we do not have time to calculate rewards before choosing among multiple options. Nor do we have time even to generate a list of possible scenarios or predict their results. Real time is a tough constraint.

From the above, we know the mappings of inputs to outputs for each action. Those mappings already embed "rewards," or, more accurately, the results of actions. RL has it backwards: it tries to calculate the "reward" of a result, while agents are interested in the result itself.
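A toy sketch of choosing by result rather than by reward, assuming the mappings are stored as rows of (action, input range, result); the actions, ranges, and results are hypothetical. The agent asks which known action produces the desired result for the current input, and no scalar reward is ever computed. A `None` answer marks a gap in knowledge rather than a low score.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class Mapping:
    action: str
    low: float    # lower bound of the relevant input range (inclusive)
    high: float   # upper bound of the relevant input range (inclusive)
    result: str   # what the action produces for inputs in this range

# Hypothetical learned mappings for one relevant input (water temperature, C).
MAPPINGS: List[Mapping] = [
    Mapping("steep_4_min", 50, 69, "weak"),
    Mapping("steep_4_min", 70, 100, "good"),
    Mapping("steep_8_min", 50, 69, "good"),
    Mapping("steep_8_min", 70, 100, "bitter"),
]

def choose(desired: str, current_input: float) -> Optional[str]:
    """Return the first action known to produce `desired` for the current input."""
    for m in MAPPINGS:
        if m.low <= current_input <= m.high and m.result == desired:
            return m.action
    return None  # no known action yields this result here: a gap, not a low score

print(choose("good", 60))    # steep_8_min
print(choose("good", 85))    # steep_4_min
print(choose("strong", 85))  # None
```

The linear scan is only for brevity; the 20 Questions trees discussed later in this post are meant to make such lookups fast.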

"the continued development of triggers and top-down prompts as alternative strategies to molecular micromanagement for reaching complex outcomes of growth and form."

Machines All the Way Up and Cognition All the Way Down: Updating the machine metaphor in biology

by Michael Levin and Richard Watson

Now let's discuss triggers and prompts. We need to distinguish between our goals, the processes that lead to them, and what initiates or controls those processes. In short, it is about duration. For example, in the experiments with planaria where the number of heads was regulated by bioelectricity, I think it was mentioned that the exposure lasted quite a long time. In other words, it was not a signal, but rather a long-lasting constraint.

So we are better served by a systems approach that attends to the effect of constraints, which play the role of a mold that shapes the result.

Actions

"An agent promotes (or pursues) a goal by taking action(s) to achieve it, regardless of

whether the agent obtains the goal or even can obtain it."

Technological Approach to Mind Everywhere: A Framework for Conceptualizing Goal-Directedness in Biology and Other Domains

by Michael Levin and David B. Resnik

Goals can be represented as differences in properties, and actions change properties. In turn, actions have their prerequisites, parameters, constraints, etc. Just as we can construct a 20 Questions decision tree for categorization, we can construct one for the results of each action. With such a tree, and by observing the opportunities and constraints in the current context, we can determine what result to expect. A tree data structure enables fast O(log N) lookups (when the tree is reasonably balanced), which suits real-time situations.
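A minimal sketch of such a tree, assuming each internal node asks one yes/no question about the current context and each leaf holds the expected result of one action. The tree, feature names, and results below are hypothetical. Lookup asks one question per level, so with N possible results a balanced tree needs about log2 N questions (an unbalanced one can need up to N - 1).

```python
from __future__ import annotations
from dataclasses import dataclass
from typing import Dict, Tuple, Union

@dataclass
class Leaf:
    result: str                 # the expected result of the action

@dataclass
class Question:
    feature: str                # a yes/no property of the current context
    yes: Union[Leaf, Question]
    no: Union[Leaf, Question]

def expect(node: Union[Leaf, Question], context: Dict[str, bool]) -> Tuple[str, int]:
    """Answer one question per level until a leaf is reached.

    Returns the expected result and the number of questions asked.
    Features missing from the context are treated as "no" (an assumption).
    """
    asked = 0
    while isinstance(node, Question):
        node = node.yes if context.get(node.feature, False) else node.no
        asked += 1
    return node.result, asked

# Hypothetical tree for the action "push the door".
push_door = Question(
    "door_unlocked",
    yes=Question("opens_toward_me",
                 yes=Leaf("stays shut: pull instead"),
                 no=Leaf("opens")),
    no=Leaf("stays shut: locked"),
)

print(expect(push_door, {"door_unlocked": True, "opens_toward_me": False}))  # ('opens', 2)
print(expect(push_door, {}))  # ('stays shut: locked', 1)
```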

Errors?

"In cell behavior and morphogenesis we first encounter the concept of error; chemistry does not make mistakes, but cells can misperceive and embryonic development, regeneration, or cancer suppression can certainly make mistakes"

Machines All the Way Up and Cognition All the Way Down: Updating the machine metaphor in biology

by Michael Levin and Richard Watson

Let me suggest that it is an error to assume that there are errors. There are results of actions, and there is a mapping of inputs to outputs. There are opposing actions from the environment or competitors. There is a reason for every result we observe. If results differ from our desires or expectations, the problem lies in our inadequate experience and knowledge. It means that we have gaps in our 20 Questions trees or that we have not learned the lessons so generously taught by the game of 20 Questions.

Lessons from 20 Questions

I present these in my post Intelligence and Language, but I believe they are worth restating briefly here.

Intelligence leaves hints about its mechanisms. The game 20 Questions is one such hint. I consider it the most important game for cognitive scientists. It fascinated Charles Sanders Peirce, who was so occupied with rigorous logic that he missed the game's full potential. I believe that intelligence does not rely on rigorous logic. Allen Newell claimed that "You can't play 20 questions with nature and win." I claim that we do play, and we do win.

1. After each answer we have two new concepts. Each is defined not by the similarities of its instances but by the differences from its sibling concept.

2. Think about atomic concepts. Apples are different from peaches, from fruits, from hammers, from galaxies, from democracy. But the differences themselves differ. Concepts cannot be independent: with respect to concepts, everything is recognized in comparison. Because of that, concepts should not be considered in isolation. Always take into account a parent category, the defining feature, and a sibling category. Intelligence leaves hints. Please pay attention to how dictionaries define words: C is P with D (a concept is its parent category plus a difference that distinguishes it from the parent's other instances, which fall into the sibling categories). In fact, there may be many sibling categories; colors are not just "red" and "non-red."

3. What is a category? It is an important question. Many assume that categories stand for objects. They do not. Any category is defined by a set of defining features (answers in the game 20 Questions), but that set does not exhaust the object's properties; there are many more features. Some features are immediate: we can ask that category about them and differentiate further. Some features are distant; for example, we cannot ask Tangible about taste, for that we need to go down to Edible.

4. The game 20 Questions relies on the specialization procedure, introducing differences and moving down the tree. For example, it breaks the Fruit category into multiple categories: Apples, Peaches, etc. What happens when we consider Apples and Peaches and decide to ignore the differences between them? We generalize to the Fruit category. Consider the Furniture category. We may break it down by the Function property, the Material property, or the Color property. By choosing which property's differences to ignore, we may generalize differently.

5. Consider now the general algorithm of the game. It starts with a set of possible categories. It proceeds by filtering the set on the basis of properties of a given object (the game can be adapted to actions or anything else worth categorization). It stops at a desired level. We may generalize this algorithm to any cognitive function or task.

6. Given the tree, which we can traverse up (generalization) and down (specialization), we can add rules at each category level and exceptions at subcategory levels.
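Several of these lessons can be condensed into a small data structure. The sketch below is my own illustration, not an existing library; all category names, properties, and rules are hypothetical. Each category stores only its defining feature (the difference from its siblings) and a link to its parent, so `definition` reads like a dictionary entry (lesson 2). `classify` filters down the tree and stops at the deepest category the answers support (lessons 4 and 5), `generalize` moves up, and `rule` returns the nearest rule, so an exception at a subcategory overrides its parent's rule (lesson 6). It uses a single tree; lesson 4's point that generalization depends on which property we ignore would need several trees built on different properties.

```python
from __future__ import annotations
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

@dataclass(eq=False)
class Category:
    name: str
    parent: Optional[Category] = field(default=None, repr=False)
    defining: Optional[Tuple[str, object]] = None  # (property, value): difference from siblings
    rules: Dict[str, object] = field(default_factory=dict)
    children: List[Category] = field(default_factory=list, repr=False)

    def child(self, name: str, prop: str, value: object, **rules: object) -> Category:
        c = Category(name, parent=self, defining=(prop, value), rules=dict(rules))
        self.children.append(c)
        return c

    def definition(self) -> str:
        """Dictionary style: a concept is its parent plus a difference."""
        if self.parent is None or self.defining is None:
            return self.name
        prop, value = self.defining
        return f"{self.name} is {self.parent.name} with {prop}={value}"

    def classify(self, obj: Dict[str, object]) -> Category:
        """Specialization: descend while some child's defining feature matches."""
        node = self
        while True:
            match = next((c for c in node.children
                          if c.defining is not None
                          and obj.get(c.defining[0]) == c.defining[1]), None)
            if match is None:
                return node  # the deepest category the answers support
            node = match

    def generalize(self) -> Optional[Category]:
        """Generalization: ignore this category's difference by moving up."""
        return self.parent

    def rule(self, key: str) -> object:
        """The nearest rule wins, so lower-level exceptions override parents."""
        node: Optional[Category] = self
        while node is not None:
            if key in node.rules:
                return node.rules[key]
            node = node.parent
        return None

thing = Category("Thing")
tangible = thing.child("Tangible", "tangible", True)
edible = tangible.child("Edible", "edible", True, tastes=True)
fruit = edible.child("Fruit", "kind", "fruit", sweet=True)
lemon = fruit.child("Lemon", "color", "yellow", sweet=False)  # exception to Fruit's rule
apple = fruit.child("Apple", "color", "red")

c = thing.classify({"tangible": True, "edible": True, "kind": "fruit", "color": "yellow"})
print(c.definition())           # Lemon is Fruit with color=yellow
print(c.rule("sweet"))          # False (the exception at the lower level)
print(apple.rule("sweet"))      # True (inherited from Fruit)
print(tangible.rule("tastes"))  # None: taste is a distant feature for Tangible
```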

Knowledge

"I don’t want emergence to just mean “things that surprise us”, as if labeling it as such provided some sort of advance, because that does not facilitate finding (and exploiting) the next amazing emergent pattern. I would rather start with the presumption that these kinds of patterns form an ordered space, with a metric that enables systematic, rational investigation – a research program that facilitates discovery. We need to understand the contents and structure of that space, and the ways in which the objects we build can pull down desired (and undesired) patterns from that space."

Platonic space: where cognitive and morphological patterns come from (besides genetics and environment) - from thoughtforms.life

by Michael Levin

I wholeheartedly endorse this quote!

We are after reliable recipes for achieving our goals. For that we need to rely on sound research, not on mysterian emergence. I believe that the 20 Questions tree proposed above, applicable to categorization, actions, etc., will be a good foundational data structure for the research program envisioned by Dr. Levin.

Fill in the Blanks

I call the process of the game 20 Questions the Semantic Binary Search. It is fast and meaningful. It guides the discovery of knowledge whenever we observe gaps in the tree.

It also enables knowledge transfer between domains. The same properties may be used in different domains. If they are well explored in one, it is natural to apply what we know about them to a new domain as a "seed." If any observations call for updating the mappings, that is easy to do.
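A compact sketch of both ideas, using a flat table of (property, range) to result instead of a full tree to keep it short. Domains, properties, and results are hypothetical. Unknown cells are the gaps that suggest the next experiment, and `seed` copies what a better-explored domain knows about the same property; a later observation simply overwrites a seeded guess.

```python
from typing import Dict, List, Optional, Tuple

Key = Tuple[str, str]                 # (property, range label)
Knowledge = Dict[Key, Optional[str]]  # None marks an unexplored cell

def gaps(k: Knowledge) -> List[Key]:
    """Unknown entries point at the next experiment to run."""
    return sorted(key for key, result in k.items() if result is None)

def seed(source: Knowledge, target: Knowledge) -> Knowledge:
    """Fill the target's unknowns with what a better-explored domain knows
    about the same property; known target entries are left untouched."""
    merged = dict(target)
    for key, result in source.items():
        if merged.get(key) is None and result is not None:
            merged[key] = result
    return merged

# Hypothetical domains and results, for illustration only.
cell_type_a: Knowledge = {("ion_x", "low"): "quiescent", ("ion_x", "normal"): "divides",
                          ("ion_x", "high"): "dies"}
cell_type_b: Knowledge = {("ion_x", "low"): None, ("ion_x", "normal"): "divides",
                          ("ion_x", "high"): None}

print(gaps(cell_type_b))  # [('ion_x', 'high'), ('ion_x', 'low')]
guess = seed(cell_type_a, cell_type_b)
print(gaps(guess))        # []
guess[("ion_x", "high")] = "quiescent"  # a hypothetical observation overrides the seed
```

A fuller version would also record which entries were seeded, so they can be treated as hypotheses until confirmed.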

Memory

The following quotes are from Machines All the Way Up and Cognition All the Way Down: Updating the machine metaphor in biology by Michael Levin and Richard Watson:

"None of us has access to the past; what we have access to are the memory engrams left in our brains and bodies by past experience – memories from our past Selves. These must be actively interpreted, not merely read out, and our cognitive system is not trying to interpret them precisely in their original form, but to continuously shape a dynamic, malleable story as required to drive behaviour in the current situation. For example, memories formed in the caterpillar persist to the butterfly despite the total refactoring of the brain during metamorphosis. However, the key feature here is not the ability to maintain information despite a drastically remodeling substrate, but the ability to re-map and re-interpret those memories. The precise memories (linking stimulus to motile behavior that results in food) of the caterpillar are useless in a butterfly body, which has vision, motion control, and food requirements totally different from its prior embodiment. What makes it into the new body, living in a higher-dimensional space, are not the details of the prior life but the deep lessons – this requires generalization and a remapping of information toward a new meaning."

"information is lost by the algorithmic compression and must be creatively un-folded into morphogenetic or behavioral outputs (confabulated specifics)"

"Suppose that cognitive confabulation is not the exception, it’s the rule; i.e. there is no such thing as precise storage and recall of brain states, there is only dynamic, context-sensitive improvisation of the ‘clues’ that are left in mental engrams."

I have a separate post about Memory, and indeed, there is evidence that our memory is unreliable at storing fine details. Let's return to categories in my model: they consist of defining features only. If only defining features are stored, then other features are stored only if they are relevant for other purposes. Essentially, our intelligence is highly efficient in terms of the value-to-cost ratio of memory. Our specialization/generalization trees store only relevant features and connections. If we believe in amulets, we surely store them too. But if they prove ineffective, their place may be reclaimed for something else.

A brief remark about the caterpillar/butterfly case. A butterfly keeps the caterpillar's memories not for its own use but to pass that memory on to future caterpillars, which in turn will have knowledge about what it's like to be a butterfly. The circle of life.

A brief remark about compression. In that respect, memory resembles language, which, according to my theory, does not transfer information, compressed or not. Sounds surprising? Language is an intelligent tool for communication between intelligent agents. What language does is pass a filter, which the other party applies to the context to figure out the relevant objects. This way they get to the source of information, where their perception collects it. Language is an advanced form of a pointing finger. A perfect example is the phrase "Take a seat!" It doesn't encode the location of a chair, right? But anyone can perform that request.
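The "Take a seat!" example can be modeled as passing a predicate rather than coordinates. In this sketch, which only illustrates the idea, the utterance is a filter function and each listener applies it to their own perceived scene; the scene contents are hypothetical, and picking the nearest match is my assumption about how the listener settles on one object. The same utterance resolves to different seats in different rooms because the specifics come from the listener's perception, not from the message.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Thing:
    name: str
    sittable: bool
    occupied: bool
    distance_m: float

def take_a_seat(t: Thing) -> bool:
    """The utterance as a filter: it says what to look for, not where it is."""
    return t.sittable and not t.occupied

def resolve(utterance: Callable[[Thing], bool], scene: List[Thing]) -> Optional[Thing]:
    """The listener applies the filter to their own perceived context.
    Picking the nearest match is an assumption made for this sketch."""
    candidates = [t for t in scene if utterance(t)]
    return min(candidates, key=lambda t: t.distance_m, default=None)

office = [Thing("chair", True, True, 1.0), Thing("sofa", True, False, 3.0),
          Thing("desk", False, False, 2.0)]
kitchen = [Thing("stool", True, False, 0.5), Thing("fridge", False, False, 1.5)]

print(resolve(take_a_seat, office).name)   # sofa
print(resolve(take_a_seat, kitchen).name)  # stool
```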

Memory may play by the same rules. It stores only the defining features of relevant pieces, be it for recognizing a situation or for figuring out relevant causes and effects. When we encounter a novel situation, one where we witness unexpected results of some actions or encounter previously unseen objects or combinations of them, we store more details so that we can later figure out the relevant elements (defining features) that will help us in the future.

Memory uses not only the trees described above but also a "key-key" storage schema, where any key may be used either to retrieve other information or to be retrieved as information. It is like a declarative sentence with many constituents: any of them can be queried, while the others serve as keys.
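A minimal sketch of a key-key store, assuming each fact is stored as a set of role/filler pairs (like the constituents of a sentence) and any role can be the one asked for. The facts and role names are hypothetical, and a linear scan is used for clarity; an inverted index from (role, filler) to facts would make lookups fast.

```python
from typing import Dict, List

Fact = Dict[str, str]  # role -> filler, like the constituents of a declarative sentence

MEMORY: List[Fact] = [
    {"agent": "Anna", "action": "gave", "object": "book", "recipient": "Ben", "time": "Monday"},
    {"agent": "Ben", "action": "gave", "object": "pen", "recipient": "Anna", "time": "Tuesday"},
]

def query(wanted: str, **keys: str) -> List[str]:
    """Return the `wanted` constituent of every fact whose other constituents match `keys`.
    Any role can be the answer in one query and a key in another."""
    return [f[wanted] for f in MEMORY
            if wanted in f and all(f.get(role) == value for role, value in keys.items())]

print(query("recipient", agent="Anna", object="book"))  # ['Ben']
print(query("time", object="pen"))                       # ['Tuesday']
print(query("agent", action="gave"))                     # ['Anna', 'Ben']
```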

One important piece of this puzzle is the multimodality of memory. We store properties separately. How do we then combine those separate parts into the whole object again? By ID. I hypothesize that issuing such IDs is the job of the hippocampus. Compare that idea to the time stamps and geolocation tags on photos taken by your smartphone.
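The ID idea can be sketched with one store per modality and a shared episode ID, much like the photo-metadata analogy. The counter `_ids` merely stands in for the hypothesized ID issuer; it is not a model of the hippocampus, and the modalities and contents are hypothetical.

```python
import itertools
from collections import defaultdict
from typing import DefaultDict, Dict, List

_ids = itertools.count(1)  # stands in for the hypothesized ID issuer
STORES: DefaultDict[str, Dict[int, object]] = defaultdict(dict)  # one store per modality

def remember(**modalities: object) -> int:
    """Store each modality separately, all tagged with one fresh episode ID."""
    episode = next(_ids)
    for modality, content in modalities.items():
        STORES[modality][episode] = content
    return episode

def recall(episode: int) -> Dict[str, object]:
    """Reassemble the whole episode from every modality carrying its ID."""
    return {m: store[episode] for m, store in STORES.items() if episode in store}

def find(modality: str, content: object) -> List[int]:
    """Cue from one modality: which episodes contain this content?"""
    return [e for e, c in STORES.get(modality, {}).items() if c == content]

e1 = remember(sight="red apple", smell="sweet", place="kitchen")
e2 = remember(sight="lemon", smell="citrus", place="kitchen")

print(recall(e1))  # {'sight': 'red apple', 'smell': 'sweet', 'place': 'kitchen'}
print(find("place", "kitchen"))                              # [1, 2]
print([recall(e)["sight"] for e in find("smell", "citrus")])  # ['lemon']
```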

Algorithm

Now we are ready to understand the core algorithm of cognition - selection of the most fitting option from the available ones respecting the relevant constraints.

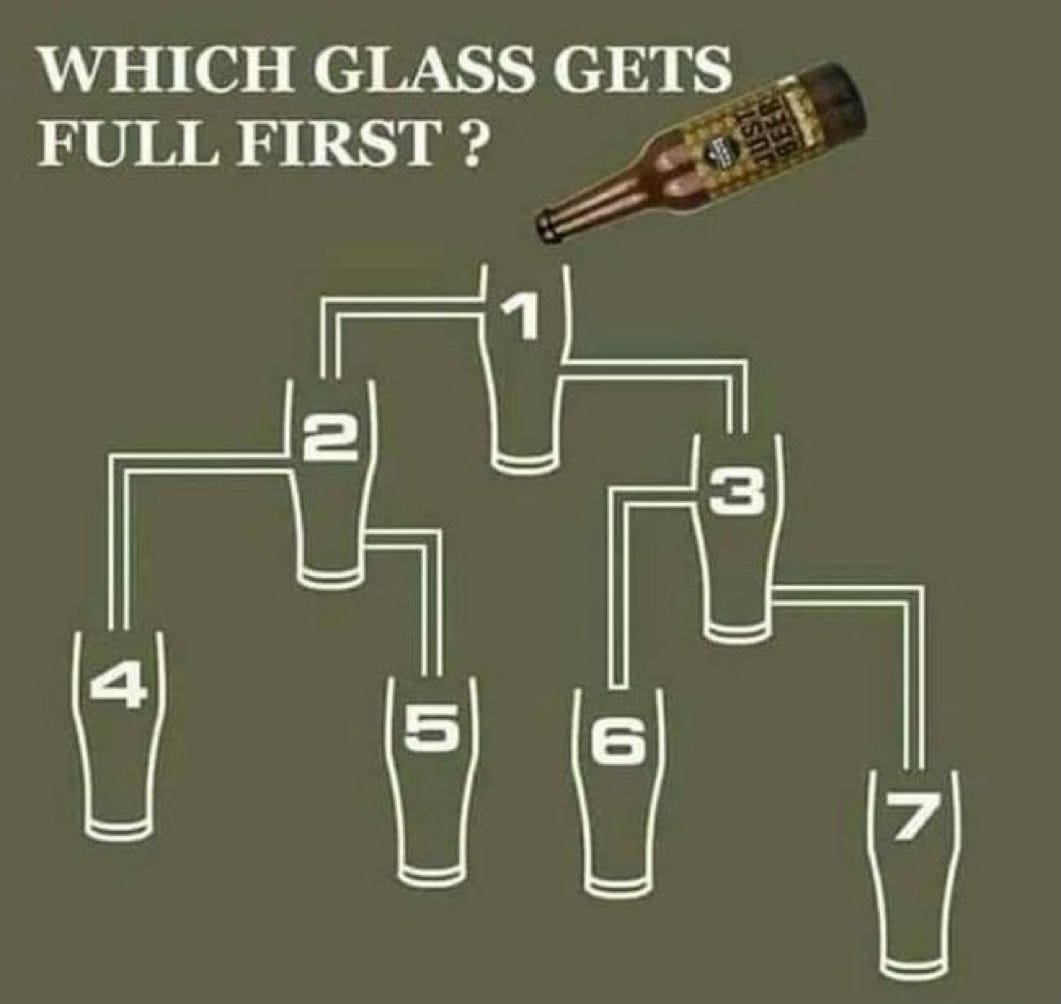

Consider this picture as a funny illustration. Pay attention to "options" and "constraints."
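A sketch of this selection step, under assumptions of my own: options are property dictionaries, constraints are yes/no predicates that filter options out, and "most fitting" is measured as the fewest properties differing from the goal. The options, constraint, and goal are hypothetical. No reward is estimated; the only operations are filtering and counting differences.

```python
from typing import Callable, Dict, List, Optional

Option = Dict[str, object]
Constraint = Callable[[Option], bool]

def select(options: List[Option], constraints: List[Constraint],
           goal: Dict[str, object]) -> Optional[Option]:
    """Keep the options that satisfy every constraint, then take the one
    with the fewest properties differing from the goal."""
    feasible = [o for o in options if all(c(o) for c in constraints)]

    def differences(o: Option) -> int:
        return sum(o.get(k) != v for k, v in goal.items())

    return min(feasible, key=differences, default=None)

lunch = [
    {"name": "salad", "hot": False, "price": 8, "vegetarian": True},
    {"name": "soup", "hot": True, "price": 6, "vegetarian": True},
    {"name": "steak", "hot": True, "price": 25, "vegetarian": False},
]
budget = lambda o: o["price"] <= 10  # hypothetical constraint

print(select(lunch, [budget], {"hot": True, "vegetarian": True})["name"])  # soup
print(select(lunch, [lambda o: o["price"] <= 5], {"hot": True}))           # None
```

On ties, `min` keeps the earliest option, so the order in which options come to mind acts as the tie-breaker.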

"Understanding how agents of all kinds, from evolved natural forms to bioengineered creations, solve problems is of high importance on several fronts."

Competency in Navigating Arbitrary Spaces: Intelligence as an Invariant for Analyzing Cognition in Diverse Embodiments

by Chris Fields and Michael Levin

"The act of navigating spaces involves several fundamental components. First, the inverse problem: which effectors to activate, to get to a preferred region of state space? It frames most aspects of survival as fundamentally a search, with different degrees of capacity to look into the future. Second, it is greatly potentiated by the ability to maintain a record of the past, i.e. a reliable, readable memory. The central question faced by any system is: what to do next among choices? Thus, it is important to begin to formalize a notion of decision-making in a deterministic system. No finite agent can discover all of the causal influences that determine its own behavior; global determinism logically disallows local determinism."

"We foresee great promise in the application of the mathematical framework of category theory"

"The failure of theories modeling human decision making on what human agents can consciously report about their thought processes makes the need for a general theory evident. The mathematical theory of active inference as Bayesian satisficing provides a scale-free framework for understanding this process."

"the task of minimizing prediction error, and hence minimizing VFE, becomes the task of predicting"

"For any biological system, the number N is enormous, and the complexity of a predictive (generative) model of an N-dimensional space increases combinatorially with N. Hence organisms cannot be expected to implement full, predictive models of the Hilbert spaces of their MBs. Indeed, a model of the full Hilbert spaces of the MB is impossible in principle."

Naturally, I discard both the VFE (variational free energy) principle and Bayesian inference as unreliable and computationally infeasible. Instead, I propose the Semantic Binary Search, an efficient and meaningful approach that will serve the needs of research perfectly.

Not only does it clarify what categories are and streamline the requests for the required developments of category theory, but it also provides clear instructions on how to enrich our knowledge and use it efficiently.

Let's consider the example of planaria and barium chloride:

"they then regenerate a new head which is barium-insensitive (in a time frame far too fast for a trial and error search through the space of possible gene expression combinations). Transcriptional comparison between original and barium-adapted heads revealed a small number of genes which were induced in order to deal with a stressor that the planarian lineage was likely never exposed to in its evolutionary history. In a space of several tens of thousands of possible actions (up- and down-regulation of specific genes), cells rapidly identified and deployed those transcriptional effectors that resolved a novel physiological stressor, showing the ability to map between transcriptional and physiological space and to improve novel solutions. This suggests it is not a trial and error process (certainly not one on evolutionary timescales), nor is it likely to be a ‘canned’ solution found from previous experience and later recalled. Instead, what is indicated is an informed problem-solving process that can actively identify the changes in genetic activity that are required to accommodate to this novel stress."

Machines All the Way Up and Cognition All the Way Down: Updating the machine metaphor in biology

by Michael Levin and Richard Watson

Regarding "likely never exposed to in its evolutionary history," it's an immediate No from me. The speed and reliability of the process indicate that it was a direct traversal of the respective tree by the provided key. The evolutionary history of planaria includes not only their history as planaria but also that of their ancestors, all the way back to bacteria. Even if it was not specifically barium chloride, they definitely encountered and remembered something from the respective range (of some chemical property).

"a small number of genes" out of "several tens of thousands" are much more interesting. Consider building a house. There are several actions - "Place a roof," "Install doors and windows," "Erect walls," "Prepare foundation," etc. All are retrieved by the key "Build a house." But in what order to perform them? Actions have prerequisites and results - the basic analysis allows us to determine the correct order. Most likely something similar happens in this case. Note that genes here are viewed like "skill mini-programs," but they are not the ones performing the core algorithm of selecting "a small number of genes" out of "several tens of thousands". Intelligence in planaria is somewhere else. Not in the head obviously. I don't know where.

***

The core algorithm of cognition is about selecting proper behavior fast. It will suggest a meaningful action, one that moves the needle. But please note that it does not provide any guarantees. It is not about "reaching" a goal, but rather about "getting closer" to it.

In the next part, I will propose several applications of this theory to various areas. Those are only speculations. My goal is not to become the author of a theory of intelligence, but to push research forward so that humans finally develop one.